Sorry for the delay here. Just wanted to make a quick clarification: the $0.25 per tonne is only for third-party verification of data going through COMET (for the first pilot project, which had excellent data records). To get to this number, we divided verification costs by the total number of NRTs (Nori Removal Tonnes) in our first sale. The $0.90-5.10 for direct measurement would be on top of this. Our understanding is that the 90 cents to $5.10 covers installing, maintaining, and managing the sensors and data to come up with the estimates. A typical project in Nori would include both of these costs.
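As a quick check on how the two cost components stack, here is a minimal sketch. The per-tonne figures are the ones quoted above; the function name and the example totals passed to it are hypothetical and only illustrate the "divide verification costs by NRTs" step.

```python
def per_tonne_cost(total_cost_usd: float, total_nrts: float) -> float:
    """Spread a fixed cost over the number of NRTs sold."""
    return total_cost_usd / total_nrts

# Hypothetical totals, chosen only so the result matches the quoted $0.25
example_verification = per_tonne_cost(total_cost_usd=2500.0, total_nrts=10000.0)

VERIFICATION_PER_TONNE = 0.25             # third-party verification (from the post)
DIRECT_MEASUREMENT_RANGE = (0.90, 5.10)   # sensors and data management (from the post)

# Both costs apply to a typical project, so the range shifts up by $0.25
combined_range = tuple(
    round(VERIFICATION_PER_TONNE + m, 2) for m in DIRECT_MEASUREMENT_RANGE
)
print(combined_range)
```

Under these figures, the combined cost would run from about $1.15 to $5.35 per tonne.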

Hey guys, just wanted to share a brief post explaining how we are using remote sensing from satellites to build accurate topsoil carbon maps in managed grasslands in Australia (10 m pixel resolution): Regen SOC from satellites

I think new open constellations like Sentinel can help to significantly reduce sampling costs and increase accuracy. Happy to read all this conversation! Great information and discussion!! So impressed by the alignment we all have regarding what's missing and what the most important gaps are.

Hi Giselle - I read your article, thanks for organizing things and doing some awesome work! I feel like you’re spot on in that this strategy focuses on identifying differences over time, rather than absolute quantification or comparison over space. That reduces complexity immensely.

@DanT @kanedan29 I’d be curious to get your thoughts on the article and their approach… how compelling is it in terms of the two big-picture use cases (management recommendations from soil carbon only, and carbon stock estimates for the creation of credits like Nori)?

Also, Giselle, you should talk to Platfarm, which is based in Australia and has farm management software for grape growers, and specifically has soil sampling as part of their work, for ground truthing or other partnerships.

Great, Greg, thanks, I'll catch up with them. Happy to hear comments on this from others as well, as this is just a first insight, but I think the potential is huge.

@gbooman Thanks for sharing this article. I agree that this approach can significantly reduce sampling costs. I have a couple of questions: 1) Did you do any ground-truthing to test the interpolated areas on the farm? 2) I am assuming that this approach would require a training set (sampling) for every year/SOC quantification?

Hey Dan,

OK, so I'm not sure which interpolated areas you are referring to. I just found a relationship (inverse power regression) for each year, and then used the equations of those regressions to translate the band 4 measurements into %SOC, pixel by pixel (through the GIS calculator). Then I used an interpolated map of bulk densities to convert %SOC into tonnes, but that’s another story… (I would like to see if there's a relationship between spectral and/or radar images and bulk densities, too, so we can use the same procedure as for %SOC to build bulk density maps. Not there yet, but an interpolated map is not the best…) So, yes, we need a minimum sample to calibrate for each year and then build the map.

But… what about this: the relationship for 3 of the 4 years follows a parallel curve, and the lateral displacement seems to be directly related to antecedent rain/moisture and temperatures. So my guess is that after having at least 5 years of correlated data I can account for a minimum of climate variability, and thus analyze climatic variables (temperature and historical rain or moisture) as explanatory variables (factors) for most of these interannual differences. If I can distill that and find the right correction factors for climate, then we can build a model for every year based on band 4 + climatic variables alone to monitor %SOC, and just keep some observatory points in the landscape to verify the calibration… The key here is to find the right variables. Is it accumulated mm of rainfall in the past 6 weeks, 2 months, 6 months…? Is it accumulated temperatures above 25 degrees, or in the last 2 months, or average monthly temperatures (though we know higher temperatures are the ones affecting carbon losses)? Hopefully I’ll be able to try this out this year, we will see. Ideas and thoughts very welcome.
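For anyone who wants to reproduce the general idea outside a GIS calculator, here is a minimal sketch of fitting a power-law curve for one year and applying it pixel by pixel. It assumes the form `soc = a * band4**b` fitted in log-log space; the function names and all numbers are synthetic placeholders, not the calibration from the post.

```python
import numpy as np

def fit_power_law(band4, soc_pct):
    """Fit soc = a * band4**b by least squares in log-log space."""
    b, log_a = np.polyfit(np.log(band4), np.log(soc_pct), 1)
    return np.exp(log_a), b

def predict_soc(band4, a, b):
    """Apply the fitted curve to every pixel of a band 4 array."""
    return a * np.asarray(band4, dtype=float) ** b

# Synthetic calibration points for one year (illustration only, not field data)
band4_samples = np.array([0.04, 0.06, 0.08, 0.10, 0.12])
soc_samples = 0.2 * band4_samples ** -1.0   # generated from a known inverse curve

a, b = fit_power_law(band4_samples, soc_samples)

# A tiny stand-in for a band 4 raster
band4_raster = np.array([[0.05, 0.07],
                         [0.09, 0.11]])
soc_map = predict_soc(band4_raster, a, b)
```

A negative exponent `b` corresponds to the inverse relationship described above. One such fit per year gives one `(a, b)` pair per year, which is why a calibration sample is needed each year.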

@gbooman thanks for the share. As a former geo intelligence analyst, I’m very excited to see how remote sensing can be put to good use in this way. And I have it in my TO DO list to try setting up a test-net node for Regen, hopefully I can get to that before the month is out…

I am chatting with my old startup (Terravion) about some part-time contracting work with them. They offer high-res aerial sensing, primarily for Ag. From my last chat, they had run a pilot project last year with a hyper-spectral sensor, with some soil-health/carbon-content applications. For me, getting a good multi-source mix of data sets, from satellites, aerial collection and on-ground would lead to the optimal balance in cost and effectiveness for wide-scale SOC analysis. I want to put my shoulder to this concept and push towards that, if I can find some purchase.

Hi everyone! As I shared before, I am working on some pilots with Regen Network, correlating Sentinel data to %SOC from the top layer, with great results so far. We are going to try this out on other farms and try to scale it up, at least for temperate grasslands. Some questions/findings here that might be of interest to you:

- How should %SOC be converted into carbon stocks (ton/ha)? I've been getting into the weeds of the Equivalent Soil Mass (ESM) method and all the discussions around fixed mass versus fixed depth. I really would like to hear your thoughts on this. For instance, which carbon metric are you using for calibrating sensors, @gbathree? Is it also %SOC? I think that if %SOC correlates highly with satellite data and is simple to measure, that allows for building maps and quantifying the carbon variability across the whole farm, so accuracy increases a lot when estimating carbon at the parcel level, with minimal effort. But having to sample bulk density increases the sampling error itself. Also, as bulk density would be sampled only at the sampling points, a spatial interpolation would be needed (which is very different from correlating to satellites and building spatially explicit models or maps), and this increases the error when converting %SOC from each pixel to carbon stocks. How large is this total error increase, versus using an average bulk density for the soil type and ecoregion? I don't know. But I know all this bulk density sampling increases the complexity of sampling and reporting, and the costs for the farmer… thoughts, @kanedan29? (@plawrence and @Gregory, FYI, opening this discussion now…)

- Also, regarding sampling stratification: given that this correlation between some Sentinel bands and %SOC was found for several years over two different farms in New South Wales, I have been using the most correlated band as a proxy for stratifying and locating the samples in a new area where we are going to sample soon. Land cover is similar at this new site, mainly grasslands with different land management histories. Just wanted to share this because I think it's a very useful outcome of this correlation to satellites for reducing sampling costs. You can analyze in GIS the histograms of the polygons from a pre-stratification and identify those with the greatest variability, or widest range of data, and those that behave normally… Also, locate the (presumably) highest and lowest carbon pixels within each chosen polygon, to be sure to cover the full range… things like that. So: (a) you know the bands that correlate in a similar or nearby area, and what the relationship is expected to look like (whether direct or inverse, linear or not); (b) you analyze the pixel values qualitatively (nothing is calibrated yet for that specific piece of land); (c) you design an optimized sampling scheme according to this qualitative data; and (d) you sample, analyze the data, correlate to satellite imagery for the same date, calibrate, determine carbon stocks… What do you think?

- I'd love to try this out with calibration from our-sci sensors…
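The pre-stratification step described in the second point above (looking at the spread of band values per polygon and locating the extreme pixels) could be sketched roughly like this. The function name, the array layout, and the numbers are assumptions for illustration; in practice this would run on a real band raster and a rasterized polygon layer.

```python
import numpy as np

def polygon_summary(band, polygon_ids):
    """Per-polygon spread of a band, plus the locations of its extreme pixels."""
    summary = {}
    for pid in np.unique(polygon_ids):
        rows, cols = np.nonzero(polygon_ids == pid)
        values = band[rows, cols]
        lo, hi = np.argmin(values), np.argmax(values)
        summary[int(pid)] = {
            "range": float(values.max() - values.min()),
            "std": float(values.std()),
            # With an inverse band-to-SOC relationship, the lowest band value
            # would be the presumed highest-carbon pixel, and vice versa.
            "min_pixel": (int(rows[lo]), int(cols[lo])),
            "max_pixel": (int(rows[hi]), int(cols[hi])),
        }
    return summary

# Synthetic uncalibrated band values and polygon labels (illustration only)
band = np.array([[0.05, 0.06, 0.10],
                 [0.05, 0.07, 0.20]])
polygon_ids = np.array([[1, 1, 2],
                        [1, 1, 2]])

summary = polygon_summary(band, polygon_ids)
widest = max(summary, key=lambda pid: summary[pid]["range"])
```

Polygons with the widest range would be candidates for more samples, and the extreme pixels help make sure the full range is covered before anything is calibrated.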

Hi Gisel,

I’ve been meaning to hop in on this thread for a bit so thanks for @'ing me! A few thoughts below:

- We’ve had similar success in estimating SOC from remote sensing data in QC, and there’s a lot of work on estimating SOC via digital soil mapping using different spatial ML processes. But I’m not entirely confident it’s a process that will work for the problems we’re trying to solve. In Figure 3 of your post you generate three different models for each of the different years. If Sentinel b4 were a reliable predictor of exact SOC values across years then this model shouldn’t change year-to-year. Instead, I think what this is demonstrating is that b4 is nicely correlated with SOC within years. That doesn’t mean it’s not useful, but you wouldn’t be able to rely on b4 alone, or per Dan T’s points, you’d have to train the model anew every year. I think digital soil mapping methods can work really well to set baseline expectations of variability, but to then pick up on more minor differences that are the result of management, local measurement of some kind might be necessary.

- Looking at figure 5 in your original post, those are some really substantial delta SOC values on that map. I’d be really, really surprised if changes that substantial occurred in such a short period of time in the top 15 cm. For comparison, this paper from a grazing study in GA showed an annual increase of 8 tons per hectare in the top 30 cm - http://www.nature.com/doifinder/10.1038/ncomms7995. To do a worked example with your data: assume a bulk density of 1 g/cm3 that doesn’t change over time and 4% SOC (roughly the mean at your site). Over the top 15 cm, that would equal about 60 tons/ha (0.04 × 1 g/cm3 × 15 cm × 100), so a 50 ton/ha increase is nearly doubling that in a year. That’s arguably not possible. I think what you’re seeing there is a result of the fact that this kind of method can’t deal with inter-annual variability.

- Re: %SOC to tons/ha. I think fixed mass is the better method unless you’re sampling past the B horizon and can ensure you’re basically getting a comprehensive profile. All the Quick Carbon sensor calibration work is on %SOC because that’s what we’re intending to measure with the sensor. Then we’d similarly scale to tons/ha, as if you were using lab methods. So yep, bulk density presents complications and a huge potential error term in calculations. Lots of papers on it lately, but how to account for it in a manner that doesn’t massively increase costs is an unresolved issue.

- I definitely think you’re right that b4 could be a good stratification input! Check out conditioned Latin hypercube sampling as an option for taking that data as an input and then generating a series of points that might best capture variability of b4 and, hence, SOC.
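The 60 tons/ha figure in the worked example above can be checked with a few lines. This is a minimal sketch of the fixed-depth conversion, using the values stated in that example (4% SOC, 1 g/cm3, top 15 cm); the function name is made up for illustration.

```python
def soc_stock_t_per_ha(soc_pct, bulk_density_g_cm3, depth_cm):
    """Fixed-depth SOC stock in t/ha.

    1 g/cm3 over 1 cm of depth is 100 t of soil per hectare
    (1 ha = 1e8 cm2, so 1e8 cm3 * 1 g/cm3 = 1e8 g = 100 t).
    """
    soil_mass_t_per_ha = bulk_density_g_cm3 * depth_cm * 100.0
    return soil_mass_t_per_ha * soc_pct / 100.0

baseline = soc_stock_t_per_ha(soc_pct=4.0, bulk_density_g_cm3=1.0, depth_cm=15.0)
print(baseline)  # 60.0

# A 50 t/ha gain on top of that baseline, as a multiple of the baseline
ratio_after_gain = (baseline + 50.0) / baseline
print(ratio_after_gain)
```

The ratio comes out a little above 1.8, which is the "nearly doubling" referred to above.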

@kanedan29 great inputs!!! Thanks! I will reply to each point below:

DK: If Sentinel b4 were a reliable predictor of exact SOC values across years then this model shouldn’t change year-to-year. Instead, I think what this is demonstrating is that b4 is nicely correlated with SOC within years.

GB: You are right about that. So far, I can only expect to use this to reduce sampling costs and to get a reliable map of the whole surface instead of just points, year by year. So, in the best case, what I envision for the near future is that a minimum number of fixed points or observatories across the whole landscape could be enough to calibrate a whole image, so the number of points that need to be sampled per farm goes down as more farms enter the system. But I also wouldn't rule out being able to build a good model that accounts for interannual variability, too. I think this shift between years in the models might be explained by simple climatic variables. I would like to have at least one more year sampled in order to test the explanatory variables that could be affecting this. My guess is accumulated temperature along with moisture (I need to test several variables, for instance accumulated rainfall in the past 3 months, 6 months, 2 weeks… accumulated temperature above 25 degrees, or is it total accumulated temperature, and over which period…). That could make it possible to build a correction factor for the reflectance data to account for the interannual variability.
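One way the "band 4 + climatic variables" idea could be tested once more years are available is a pooled regression with a climate covariate. The sketch below is only an assumption about the model form (`log(soc) = c0 + c1*log(band4) + c2*rain_mm`, where the rainfall term shifts otherwise parallel curves); the variable names and all data are synthetic.

```python
import numpy as np

def fit_climate_adjusted(band4, rain_mm, soc_pct):
    """Least squares for log(soc) = c0 + c1*log(band4) + c2*rain_mm."""
    X = np.column_stack([np.ones_like(band4), np.log(band4), rain_mm])
    coef, *_ = np.linalg.lstsq(X, np.log(soc_pct), rcond=None)
    return coef

# Synthetic pooled samples from three "years" (illustration only)
band4 = np.tile(np.array([0.04, 0.06, 0.08, 0.10]), 3)
rain_mm = np.repeat(np.array([50.0, 120.0, 200.0]), 4)  # antecedent rainfall per year

# Generate SOC from known coefficients so the fit can be checked
true_coef = np.array([-1.5, -1.0, 0.002])
soc_pct = np.exp(true_coef[0] + true_coef[1] * np.log(band4) + true_coef[2] * rain_mm)

coef = fit_climate_adjusted(band4, rain_mm, soc_pct)
```

With real data, the candidate variables listed above (rainfall over different windows, accumulated temperature above a threshold) could each be swapped in for `rain_mm` and compared on held-out samples.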

DK: Looking at figure 5 in your original post, those are some really substantial delta SOC values on that map. I’d be really, really surprised if changes that substantial occurred in such a short period of time in the top 15 cm.

GB: You are pointing exactly to the issue that forced me to review this whole thing. Yes, I also think it's not possible. So, I'm going to get into some details regarding the methodology. First, the maps of %SOC were built each year based on the model from that year. Also, as the maximum %SOC found in the samplings (from the lab tests) was close to 7%, I used that as a top value in order to be conservative (because I don't know what the relationship looks like beyond that value). So, every pixel has a value between 0 and 7% each year. I don't think the error comes from that part, because the model fits very well and there's this top value of 7%. The problem came when I used bulk densities to convert %SOC to stocks in GIS (map algebra)… I just spatially interpolated the bulk densities from 2019 and used that single interpolation for every year to do the algebra at the pixel level (because we don't have bulk densities from previous years). I think that's a big source of error, because we are assuming constant bulk densities between years, on top of a lot of spatial variability and errors from the interpolation. I guess it would be better to just use an average bulk density for that zone. That is, if I stick to the fixed depth method and not ESM.

Also, I am now checking with the farmers, because fires or something like that might have happened in some parcels in a certain year, which I am not accounting for. Probably more bands should be used in the model (maybe using ML) in order to mask out any pixels showing extreme values that indicate things like that (fire, flooding, etc.). Anyway, one more thing: when you compare these pixel values to information reported elsewhere, the values you are comparing to are averages per parcel or per farm. So in this case, if I show you the average interannual changes per parcel, you would see something like this:

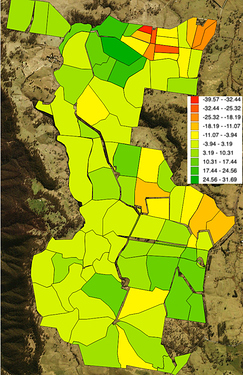

(The scale is in ton/ha, and this is the change in SOC stocks between 2017 and 2018.)

So, there are still some inconsistencies in some parcels, and I need to check with the farmers over there, but the average SOC change for the whole farm is around 4.2 ton/ha between 2017 and 2018, and 3.8 ton/ha between 2018 and 2019 (positive values, meaning increments). So these numbers are more reasonable, and they come from the same data; only the scale changes.
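The map algebra described above (cap %SOC at 7%, convert to stocks, then average the change per parcel) could be sketched as follows. The 7% cap is from the post; the single average bulk density of 1.0 g/cm3, the 15 cm depth, the function name, and the arrays are assumptions for illustration only.

```python
import numpy as np

def parcel_mean_change(soc_pct_t0, soc_pct_t1, parcel_ids,
                       bulk_density=1.0, depth_cm=15.0, soc_cap_pct=7.0):
    """Mean per-parcel change in fixed-depth SOC stock (t/ha) between two years."""
    # t/ha per 1 %SOC: bulk density * depth * 100 (soil mass) * 1/100 (percent)
    factor = bulk_density * depth_cm
    stock_t0 = np.clip(soc_pct_t0, 0.0, soc_cap_pct) * factor
    stock_t1 = np.clip(soc_pct_t1, 0.0, soc_cap_pct) * factor
    delta = stock_t1 - stock_t0
    return {int(pid): float(delta[parcel_ids == pid].mean())
            for pid in np.unique(parcel_ids)}

# Synthetic %SOC maps for two years and parcel labels (illustration only)
soc_2017 = np.array([[3.0, 4.0],
                     [5.0, 8.0]])
soc_2018 = np.array([[3.2, 4.0],
                     [5.0, 9.0]])
parcels = np.array([[1, 1],
                    [2, 2]])

changes = parcel_mean_change(soc_2017, soc_2018, parcels)
```

Note how the pixel that goes from 8% to 9% contributes no change, because both values are clipped to the 7% cap before conversion.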

DK: (…) Then we’d similarly scale to tons/ha, as if you were using lab methods.

GB: How are you translating to tons/ha then? Fixed depth, ESM? Where do you get BD values from? Are you sensing that variable too?
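For readers following the fixed depth versus ESM question, here is a minimal sketch of why the two can disagree. It assumes a single sampled layer with uniform %SOC and a reference soil mass taken from the first sampling; real ESM calculations work with multiple depth layers, which this does not attempt. Function names and numbers are illustrative.

```python
def fixed_depth_stock(soc_pct, bulk_density_g_cm3, depth_cm):
    """SOC stock (t/ha) in a fixed depth of soil."""
    return bulk_density_g_cm3 * depth_cm * 100.0 * soc_pct / 100.0

def esm_stock_single_layer(soc_pct, reference_mass_t_per_ha):
    """SOC stock (t/ha) in a fixed mass of soil (simplified, one layer)."""
    return reference_mass_t_per_ha * soc_pct / 100.0

# Reference soil mass: 15 cm at 1.0 g/cm3 is 1500 t soil/ha
reference_mass = 1.0 * 15.0 * 100.0

# Same 4% SOC in both years, but bulk density rises from 1.0 to 1.2 g/cm3
fd_before = fixed_depth_stock(4.0, 1.0, 15.0)
fd_after = fixed_depth_stock(4.0, 1.2, 15.0)
esm_before = esm_stock_single_layer(4.0, reference_mass)
esm_after = esm_stock_single_layer(4.0, reference_mass)

fixed_depth_change = fd_after - fd_before   # apparent gain from compaction alone
esm_change = esm_after - esm_before         # no change in concentration, no gain
```

Under these assumptions, the fixed-depth method reports a gain of about 12 t/ha purely from the bulk density change, while the mass-based comparison reports none.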

More general questions:

- Does it make sense to convert %SOC to stocks for reporting if the error from using bulk densities, plus the higher cost, outweighs any error from not considering the bulk densities? I don't know how to get a sense of that. I will pull some results from the sites I am working on and come back to discuss this topic more, asap. I am thinking, for instance, that there could be a threshold of BD variability expected for certain soil types/bioregions, or for land management coupled with those, so that you could say “for these lands you can use %SOC as a proxy for SOC stocks, converting with a fixed average BD from global BD maps, and the expected error from that conversion would be less than xx%.” And so if, for instance, the range of error is between 5 and 10%, but you skip the whole BD thing (sampling + costs), and you have already improved the accuracy at the parcel level by using calibrated satellite remote sensing (instead of simple interpolation from points, or modelling)… I think maybe you could leave the exhaustive BD sampling as an option for a “premium” assessment and still keep a minimum desirable accuracy.

- So, imagine we find out that %SOC is more accurate than stocks for reporting, as that's what we are actually sensing with satellite RS and on-the-ground sensors. Or more doable… whatever. Do you think it is even possible to skip the conversion to stocks and just report %SOC for comparing among sites, over time, and between management practices? From the market point of view, I mean… maybe we are forced to convert.
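To get a rough feel for the bulk density question in the first point above: in the fixed-depth conversion the stock is the product of %SOC and BD, so to first order, and assuming the two errors are independent, their relative errors add in quadrature. The sketch below uses hypothetical error levels, not measured ones.

```python
import math

def stock_relative_error(rel_err_soc, rel_err_bd):
    """First-order relative error of stock = soc * bd * depth * const,
    assuming independent errors in soc and bd and an exact depth."""
    return math.sqrt(rel_err_soc ** 2 + rel_err_bd ** 2)

# Hypothetical: 5% relative error in %SOC, 10% from using an average BD
with_average_bd = stock_relative_error(0.05, 0.10)

# Hypothetical: same %SOC error, 3% error from site-specific BD sampling
with_sampled_bd = stock_relative_error(0.05, 0.03)

print(round(with_average_bd, 3), round(with_sampled_bd, 3))
```

Comparing the two numbers against the extra cost of BD sampling is one way to frame the "premium assessment" threshold idea, once real error estimates for a soil type or bioregion are available.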

DK: Check out conditioned latin hypercube sampling as an option for taking that data as an input and then generating a series of points that might best capture variability of b4 and, hence, SOC.

GB: great! thanks! I will!!!
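As a starting point, here is a simplified one-variable stand-in for that idea: split the b4 values into equal-probability strata and draw one pixel from each. This is not the full conditioned Latin hypercube algorithm, which handles several covariates at once through a search step that this sketch omits; the function name, seed, and data are assumptions.

```python
import numpy as np

def quantile_stratified_sample(values, n_points, seed=0):
    """Pick one pixel index from each of n_points equal-probability strata.

    Requires n_points <= number of pixels. Returns indices into the
    flattened `values` array.
    """
    rng = np.random.default_rng(seed)
    flat = np.asarray(values, dtype=float).ravel()
    order = np.argsort(flat)                   # pixel indices sorted by band value
    strata = np.array_split(order, n_points)   # consecutive quantile bins
    return np.array([rng.choice(s) for s in strata])

# Synthetic b4 values for 100 pixels (illustration only)
b4_values = np.linspace(0.02, 0.20, 100)
sample_idx = quantile_stratified_sample(b4_values, n_points=5)
sampled_b4 = b4_values[sample_idx]
```

Each sampled point falls in a different fifth of the b4 distribution, so the sample covers the full range of the proxy rather than clustering around its most common values.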

Hey guys

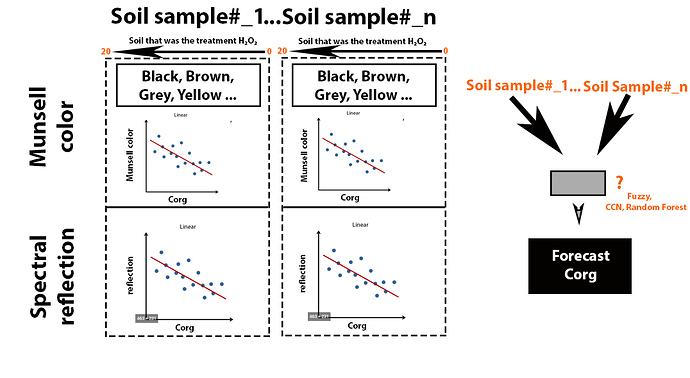

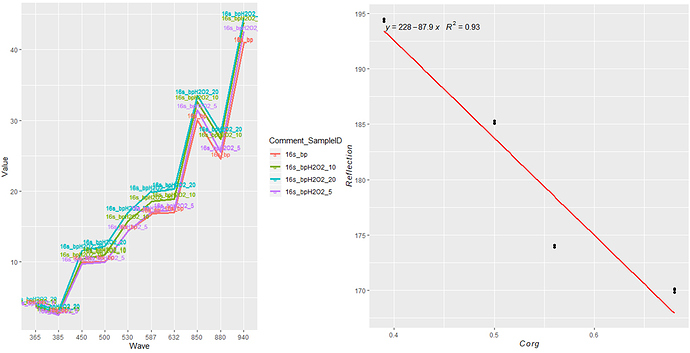

I have started the experiment “The dependence of soil reflectance (SR) on the removal of organic matter using H2O2 (35%)”.

I am planning to get a wide range of Corg values from a single sample and use them to get a calibration for a given soil type or field. I treat every soil sample with H2O2 (5, 10, 15, 20 ml per 5 g soil) and build the SOC-Munsell color-SR relationship. Eventually, I hope to get a simple regression model (lm(Corg ~ Reflection, ) and lm(Corg ~ Munsell color, )) for each soil sample.

I don’t have the final model yet, but that is how I see it.

I would like to hear your expert opinions. Thank you.
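For anyone who wants to try the same kind of per-sample fit outside R, a simple linear regression of Corg on reflectance could be sketched like this. The numbers are synthetic, the function name is made up, and the Munsell color model is left out because it would need a numeric or categorical encoding that the post does not specify.

```python
import numpy as np

def fit_linear(x, y):
    """Ordinary least squares for y = intercept + slope * x."""
    slope, intercept = np.polyfit(x, y, 1)
    return intercept, slope

# Synthetic reflectance readings after successive H2O2 treatments of one sample
reflectance = np.array([0.10, 0.14, 0.18, 0.22, 0.26])
corg = 5.0 - 15.0 * reflectance   # generated from a known line, not measured

intercept, slope = fit_linear(reflectance, corg)
predicted_corg = intercept + slope * 0.20
```

With real measurements, the residuals from this fit would show whether a straight line is adequate for a given soil or whether a curved relationship is needed.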

I’m especially curious to hear @kanedan29, @plawrence, and @jherrick's thoughts on this method. It certainly would make local calibrations immensely easier if accurate, and provide a wider range of calibration possibilities than we currently have.

@AndriyHz @gbathree

Interesting approach to a challenging problem. Specific (or even soil taxonomy-specific) calibrations should help a lot. As for the H2O2 approach, I’m not sure. I would be surprised if H2O2 removed SOM in a way that results in the same reflectance that biological oxidation would in the field. But it might. At the risk of adding work, perhaps start with a soil (same map unit component) for which you have a range of carbon samples available, then remove carbon using H2O2 from the highest-carbon sample and compare? Or maybe you have already done this?

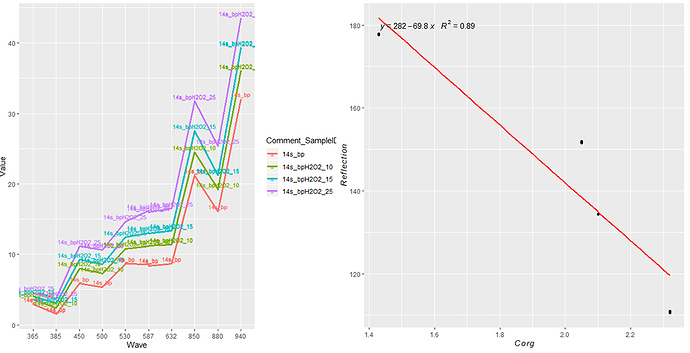

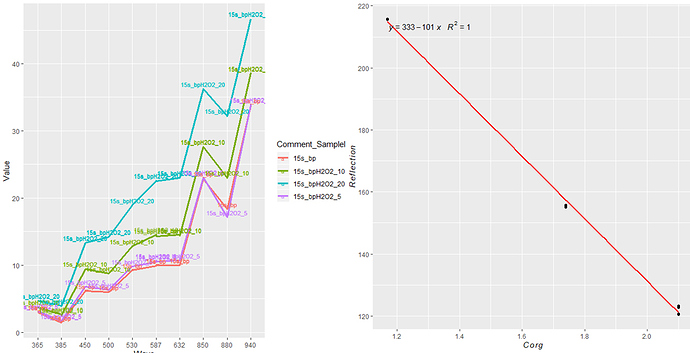

Three examples (in the near future there should be 20) of ag soils that were treated with H2O2 (5, 10, 15, 20 ml H2O2 per 5 g soil).

Eventually, I want to get a simple regression model (lm(Corg ~ Reflection, )) for each soil sample.

Interesting. Do you have samples of the same soil with different carbon contents (e.g. in cropland and under a fence) that you could check to see if you get the same spectral signature from the naturally depleted (tillage) and H2O2-depleted samples?

I’m working on it