Hi all, we have a small AI in Ag group that meets every other Friday. It's open-ended: a space to share what you've learned and to learn from what others share. Our goal is for everyone to spend less time repeating what others have already learned, and perhaps to use similar tools and systems so we can learn together more effectively.

Feel free to email me if you'd like to join the group, especially if you have applications to share, or if you're investigating AI applications in ag and want to share what you've learned (and learn from others)!

Agenda

Intros

- Kirsten Simmons (Good Ag) - working on an AI project to do voice to

- David Peery - doing some LLM modeling for variety trial work.

- Rose Fontana - has done NLP in research, as well as genetics work with isopods (roly-polies). Has done LLM and NLP work for startups and large companies, in health care and elsewhere. Now getting into the ag space.

- David Thomas - works for Open Food Network on dynamic procurement, connecting larger buyers with smaller producers. Dipping his toes into AI and figuring out how to use it; working with the Beckn protocol.

- Zacchae (pronounced "Zaki") - does machine learning professionally and is getting more into the DWeb space. Interested in federated learning: keeping your data private while learning a shared model with the partners you choose.

- Kirsten - mentioned that an organization called Concrete Jungle used sensors on tree branches to determine when a tree was ready to harvest.

Feedback on the NAPDC Group

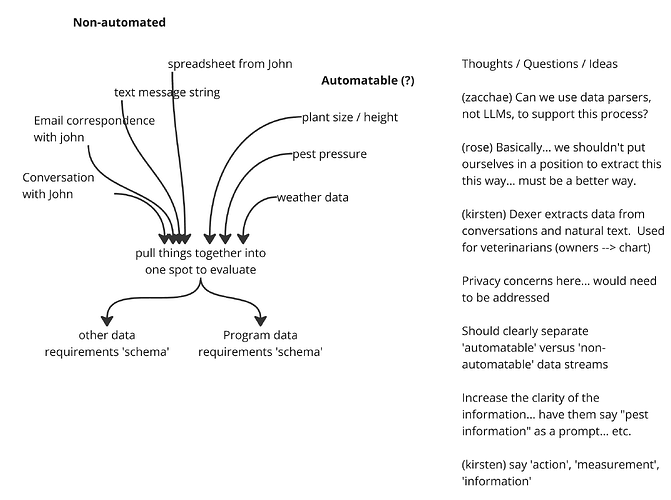

Greg shared the gap analysis work he has done with several groups (OpenTEAM, Pasa, CAFF, Fibershed, Mad Ag, Momentum) that are interested in using AI to take diverse input data and turn it into structured data (for program participation, evaluation, etc.).

The group gave feedback on what might make sense and shared ideas, which were captured on the Miro board (image above).

Some highlights:

- Ag is diverse, so tools really need to be led by users. Understanding the end user is more important than the technology.

- Tagging incoming information with a limited set of tags will significantly help any tool store the results correctly. For example, instead of speaking freely, say 'tillage:' and then describe a tillage operation, or say 'observation:' and then give the observation. This reduces complexity for any automated system.
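To illustrate why a small tag vocabulary helps, here is a minimal sketch, assuming a transcript where each item is spoken as "tag: details". Only "tillage" and "observation" come from the discussion; the function name `parse_tagged_transcript`, the `TaggedEntry` record, and the "untagged" fallback are hypothetical choices for illustration, not any group's actual tool. A plain regular expression is enough to split the text into structured records, which is exactly the reduction in complexity the tags buy you.

```python
import re
from dataclasses import dataclass

# Hypothetical tag vocabulary: "tillage" and "observation" come from the notes;
# any other tags would be your own choice.
ALLOWED_TAGS = {"tillage", "observation"}

# Matches an allowed tag word followed by a colon, e.g. "Tillage:" or "observation:".
TAG_PATTERN = re.compile(r"\b(" + "|".join(sorted(ALLOWED_TAGS)) + r"):", re.IGNORECASE)


@dataclass
class TaggedEntry:
    tag: str
    text: str


def parse_tagged_transcript(transcript: str) -> list[TaggedEntry]:
    """Split a transcript into one entry per spoken tag.

    Text spoken before the first recognised tag is kept under "untagged"
    so nothing is silently dropped.
    """
    entries: list[TaggedEntry] = []
    matches = list(TAG_PATTERN.finditer(transcript))

    # Anything said before the first tag (or the whole text if there are no tags).
    first_start = matches[0].start() if matches else len(transcript)
    leading = transcript[:first_start].strip()
    if leading:
        entries.append(TaggedEntry("untagged", leading))

    # Each tag's details run until the next tag or the end of the transcript.
    for i, match in enumerate(matches):
        end = matches[i + 1].start() if i + 1 < len(matches) else len(transcript)
        details = transcript[match.end():end].strip()
        entries.append(TaggedEntry(match.group(1).lower(), details))
    return entries


if __name__ == "__main__":
    note = "Tillage: disked the north field twice. Observation: aphids on the kale."
    for entry in parse_tagged_transcript(note):
        print(entry.tag, "|", entry.text)
    # prints:
    # tillage | disked the north field twice.
    # observation | aphids on the kale.
```

Without the tags, the same transcript would need an LLM or classifier to guess where one record ends and what type it is; with them, the remaining AI work can focus on extracting fields within each already-labeled entry.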

Agenda for next time

- Visual learning from trail cams that doesn't require human input: pest detection and other applications.

- Discuss RAG (retrieval-augmented generation) and compare it to other options.